Four generations of rovers have traversed the red planet gathering scientific data, sending back evocative photographs, and surviving incredibly harsh conditions, all using onboard computers less powerful than an iPhone 1. The latest rover, Perseverance, was launched on July 30, 2020, and engineers are already dreaming of a future generation of rovers.

Although these missions are a major achievement, they have only scratched the surface (literally and figuratively) of the planet and its geology, geography, and atmosphere.

“The surface area of Mars is approximately the same as the total area of the land on Earth,” said Masahiro (Hiro) Ono, leader of the Robotic Surface Mobility Group at NASA’s Jet Propulsion Laboratory (JPL), which has led all the Mars rover missions, and one of the researchers who developed the software that allows the current rover to operate.

“Imagine you’re an alien and you know almost nothing about Earth, and you land on seven or eight points on Earth and drive a few hundred kilometers. Does that alien species know enough about Earth?” Ono asked. “No. If we want to represent the enormous diversity of Mars, we will need more measurements on the ground, and the key is substantially extended distance, covering thousands of kilometers.”

Traveling across Mars’ varied and treacherous terrain with limited computing power and a restricted energy budget, only as much sunlight as the rover can capture and convert into power in a single Martian day, or sol, is an overwhelming challenge.

The first rover, Sojourner, covered 330 feet over 91 sols; the second, Spirit, traveled 4.8 miles over several years; Opportunity traveled 28 miles over 15 years; and Curiosity has covered more than 19 kilometers (about 12 miles) since landing in 2012.

“Our team is working on Mars robot autonomy to make future rovers more intelligent, to enhance safety, to improve productivity, and in particular to drive faster and farther,” Ono said.

New hardware, new possibilities

The Perseverance rover, which launched this summer, computes using RAD 750s, radiation-hardened single-board computers manufactured by BAE Systems Electronics.

However, future missions may use new high-performance, radiation-hardened multicore processors designed through the High Performance Spaceflight Computing (HPSC) project. (Qualcomm’s Snapdragon processor is also being tested for missions.) These chips will provide approximately one hundred times the computational capacity of existing flight processors using the same amount of power.

“All of the autonomy that you see on our latest Mars rover is largely human-in-the-loop,” meaning it requires human interaction to operate, according to Chris Mattmann, deputy chief technology and innovation officer at JPL. “Part of the reason for that is the limits of the processors that are running on them. One of the core missions for these new chips is to do deep learning and machine learning, like we do terrestrially, on board. What are the killer apps given that new computing environment?”

The Machine Learning-based Analytics for Autonomous Rover Systems (MAARS) program, which began three years ago and will end this year, encompasses a range of areas where artificial intelligence could be useful. The team presented the results of the MAARS project at the IEEE Aerospace Conference in March 2020. The project was a finalist for the NASA Software Award.

“Terrestrial high-performance computing has enabled breakthroughs in autonomous vehicle navigation, machine learning, and data analysis for Earth-based applications,” the team wrote in their IEEE paper. “The main roadblock to a Mars exploration rollout of such advances is that the best computers are on Earth, while the most valuable data is located on Mars.”

Training machine learning models on the Maverick2 supercomputer at the Texas Advanced Computing Center (TACC), as well as on Amazon Web Services and JPL clusters, Ono, Mattmann and their team have been developing two new capabilities for future Mars rovers, which they call Drive-By Science and Energy-Optimal Autonomous Navigation.

Energy-optimal autonomous navigation

Ono was part of the team that wrote the onboard pathfinding software for Perseverance. Perseverance’s software includes some machine learning capabilities, but the way it does pathfinding is still fairly naive.

“We’d like future rovers to have a human-like ability to see and understand terrain,” Ono said. “For rovers, energy is very important. There’s no paved highway on Mars. The drivability varies substantially based on the terrain, for instance beach versus bedrock. That is not currently considered. Coming up with a path with all of these constraints is complicated, but that’s the level of computation that we can handle with the HPSC or Snapdragon chips. But to do so, we’re going to need to change the paradigm a little bit.”
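To make the terrain-dependent energy cost concrete, here is a minimal sketch of energy-aware path planning on a small grid. It is an illustration only, not the MAARS or Perseverance software: the `TERRAIN_COST` values, the grid layout, and the function name `min_energy_path` are all assumptions, and Dijkstra's algorithm stands in for whatever planner the team actually uses.

```python
import heapq

# Hypothetical energy cost (arbitrary units) of driving onto one cell of each terrain.
TERRAIN_COST = {"bedrock": 1.0, "sand": 4.0}


def min_energy_path(grid, start, goal):
    """Dijkstra's algorithm on a 4-connected grid; returns (total_energy, path)."""
    rows, cols = len(grid), len(grid[0])
    best = {start: 0.0}          # lowest known energy to reach each cell
    parent = {}                  # back-pointers used to rebuild the path
    frontier = [(0.0, start)]    # priority queue ordered by energy spent so far
    while frontier:
        energy, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if energy > best[cell]:
            continue  # stale queue entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                new_energy = energy + TERRAIN_COST[grid[nr][nc]]
                if new_energy < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_energy
                    parent[(nr, nc)] = cell
                    heapq.heappush(frontier, (new_energy, (nr, nc)))
    if goal not in best:
        raise ValueError("goal unreachable")
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return best[goal], path[::-1]


# Made-up terrain map: a sandy patch sits between the start and the goal.
GRID = [
    ["bedrock", "sand",    "sand",    "bedrock"],
    ["bedrock", "sand",    "sand",    "bedrock"],
    ["bedrock", "bedrock", "bedrock", "bedrock"],
]

energy, path = min_energy_path(GRID, (0, 0), (0, 3))
print(energy, path)
```

In this toy map the shortest route crosses two sand cells, while the planner prefers a longer detour that stays on bedrock because it costs less energy overall. A distance-only planner would not make that trade.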

Ono explains this new paradigm as commanding by policy, a middle ground between the human-dictated “Go from A to B and do C” and the purely autonomous “Go do science.”

Commanding by policy involves pre-planning for a range of scenarios and then allowing the rover to determine what conditions it is encountering and what it should do.

“We use a supercomputer on the ground, where we have infinite computational resources like those at TACC, to develop a plan where a policy is: if X, then do this; if Y, then do that,” Ono explained. “We’ll basically make a huge to-do list and send gigabytes of data to the rover, compressing it in huge tables. Then we’ll use the increased power of the rover to decompress the policy and execute it.”

The pre-planned list is generated using machine learning-derived optimizations. The onboard chip can then use those plans to perform inference: taking inputs from its environment and plugging them into the pre-trained model. The inference tasks are computationally much easier and can be run on a chip like those that may accompany future rovers to Mars.

“The rover has the flexibility of changing the plan on board instead of just sticking to a sequence of pre-planned options,” Ono said. “This is important in case something bad happens or it finds something interesting.”
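The "if X, do this; if Y, do that" idea can be sketched as a lookup table that is built and compressed on the ground, then decompressed and queried on the rover. This is a toy illustration under stated assumptions: the situations, the action names, and the helpers `build_policy`, `pack`, `unpack` and `act` are hypothetical, and a hand-written rule table stands in for the machine-learning-derived policy the team describes.

```python
import json
import zlib


def build_policy():
    """Ground side: precompute an action for every (terrain, battery) situation."""
    policy = {}
    for terrain in ("bedrock", "sand", "unknown"):
        for battery in ("low", "ok"):
            if battery == "low":
                action = "stop_and_recharge"
            elif terrain == "bedrock":
                action = "drive_fast"
            elif terrain == "sand":
                action = "drive_slow"
            else:
                action = "stop_and_image"
            policy[f"{terrain}|{battery}"] = action
    return policy


def pack(policy):
    """Ground side: serialize the table and compress it for uplink."""
    return zlib.compress(json.dumps(policy, sort_keys=True).encode("utf-8"))


def unpack(blob):
    """Rover side: decompress the uplinked bytes back into a table."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))


def act(policy, terrain, battery):
    """Rover side: look up the pre-planned action; fall back to a safe default."""
    return policy.get(f"{terrain}|{battery}", "stop_and_wait")


uplink = pack(build_policy())   # bytes that would be sent to the rover
onboard = unpack(uplink)        # table the rover executes against

print(act(onboard, "sand", "ok"))
print(act(onboard, "sand", "low"))
```

The expensive step, deciding what to do in each situation, happens once on the ground. The rover only pays for decompression and a lookup, and it can switch actions as conditions change without waiting for new commands.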

Drive-by science

According to Mattmann, current Mars missions typically use tens of images per sol from the rover to decide what to do the next day. “But what if in the future we could use one million image captions instead? That’s the core tenet of Drive-By Science,” he said. “If the rover can return text labels and captions that were scientifically validated, our mission team would have a lot more to go on.”

Mattmann and the team adapted Google’s Show and Tell software, a neural image caption generator first launched in 2014, for the rover missions, the first non-Google application of the technology.

The algorithm takes in images and spits out human-readable captions. These include basic but critical information, like cardinality (how many rocks, how far away?) and properties like the vein structure in outcrops near bedrock. “The types of science knowledge that we currently use images for to decide what’s interesting,” Mattmann said.

Over the past few years, planetary geologists have labeled and curated Mars-specific image annotations to train the model.

“We use the one million captions to find 100 more important things,” Mattmann said. “Using search and information retrieval capabilities, we can prioritize targets. Humans are still in the loop, but they’re getting much more information and are able to search it a lot faster.”
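As a rough illustration of prioritizing targets by searching captions, the sketch below ranks images by how often query terms appear in their captions. It is a deliberately simple stand-in, not the team's retrieval system: the captions, image IDs, and the functions `score` and `top_targets` are invented for the example, and a real system would likely use a proper search index.

```python
import heapq


def score(caption, query_terms):
    """Count occurrences of the query terms in a caption (whitespace tokenization)."""
    words = caption.lower().split()
    return sum(words.count(term) for term in query_terms)


def top_targets(captions, query, k=3):
    """Return up to k image IDs whose captions best match the query."""
    terms = query.lower().split()
    scored = ((score(text, terms), image_id) for image_id, text in captions.items())
    matches = [(s, image_id) for s, image_id in scored if s > 0]
    return [image_id for _, image_id in heapq.nlargest(k, matches)]


# Made-up captions of the kind a caption generator might return.
CAPTIONS = {
    "img_001": "three small rocks on sand about two meters away",
    "img_002": "outcrop with light veins near bedrock",
    "img_003": "flat sand with no rocks",
    "img_004": "bedrock outcrop with many veins and more veins in shadow",
}

print(top_targets(CAPTIONS, "veins outcrop", k=2))
```

Because captions are short text rather than full images, a query like this can run over a very large collection quickly, leaving the science team to review only the top-ranked candidates.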

Results of the team’s work appear in the September 2020 issue of Planetary and Space Science.

TACC’s supercomputers proved instrumental in helping the JPL team test the system. On Maverick2, the team trained, validated, and improved their model using 6,700 labels created by experts.

The ability to travel much farther would be a necessity for future Mars rovers. An example is the Sample Fetch Rover, proposed to be developed by the European Space Agency and launched in the late 2020s, whose main task will be to pick up samples dug up by the Mars 2020 rover and collect them.

“Those rovers, over a period of years, would have to drive 10 times farther than previous rovers to collect all the samples and get them to a rendezvous site,” Mattmann said. “We’ll need to be smarter about the way we drive and use energy.”

Before new models and algorithms are loaded onto a rover destined for space, they are tested on a dirt training ground next to JPL that serves as an Earth-based analogue for the surface of Mars.

The team developed a demonstration that shows an overhead map, streaming images collected by the rover, and the algorithms running live on the rover, and then shows the rover doing terrain classification and captioning on board. They had hoped to finish testing the new system this spring, but COVID-19 closed the lab and delayed testing.

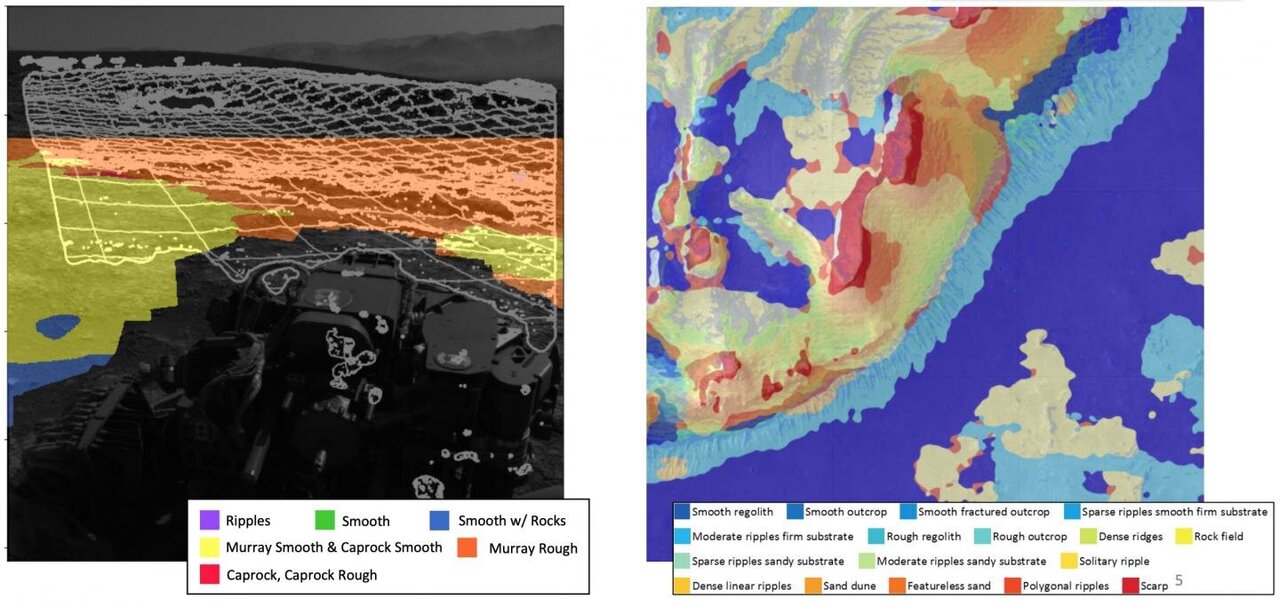

Meanwhile, Ono and his team have developed a citizen science app, AI4Mars, that allows the public to annotate more than 20,000 images taken by the Curiosity rover. These will be used to further train machine learning algorithms to identify and avoid hazardous terrain.

The public has generated 170,000 labels so far in less than three months. “People are excited. It’s an opportunity for people to help,” Ono said. “The labels that people create will help us make the rover safer.”

The efforts to develop a new AI-based paradigm for future autonomous missions can be applied not just to rovers but to any autonomous space mission, from orbiters to flybys to interstellar probes, Ono says.